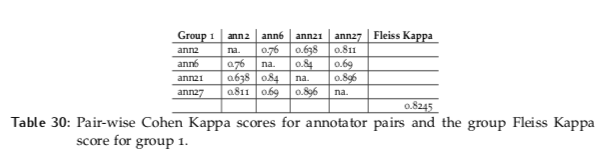

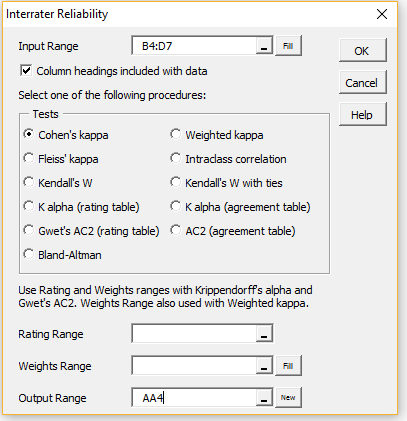

Inter-Annotator Agreement (IAA). Pair-wise Cohen kappa and group Fleiss'… | by Louis de Bruijn | Towards Data Science

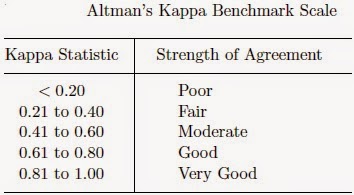

K. Gwet's Inter-Rater Reliability Blog: Benchmarking Agreement Coefficients | Inter-rater reliability: Cohen kappa, Gwet AC1/AC2, Krippendorff Alpha

report kappa, report, 1913. Aubrey D. Kelly. The Delta Kappa Phi Fraternity Prize, $10.00: For best executed work on the Hand Harness Loom. First year classes. Awarded to William B. Scatchard. Honorable mention to -

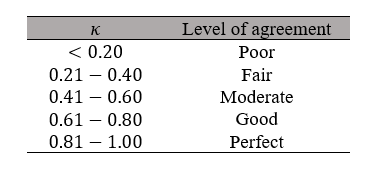

![\[PDF\] Understanding interobserver agreement: the kappa statistic. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/e45dcfc0a65096bdc5b19d00e4243df089b19579/3-Table3-1.png)

![\[PDF\] Understanding interobserver agreement: the kappa statistic. | Scinapse](https://asset-pdf.scinapse.io/prod/1444168786/figures/table-3.jpg)